Building AI text detection that explains itself

The fundamental problem with most AI text detectors is that they're black boxes pretending to solve a trust problem. You paste in some text, you get back a number—"87% AI-generated"—and you're supposed to just believe it. No explanation of why. No indication of which parts. No way to interrogate the verdict. You're asked to trust a system that offers you zero transparency, to make a judgment about whether you should trust another system's output. The irony is thick.

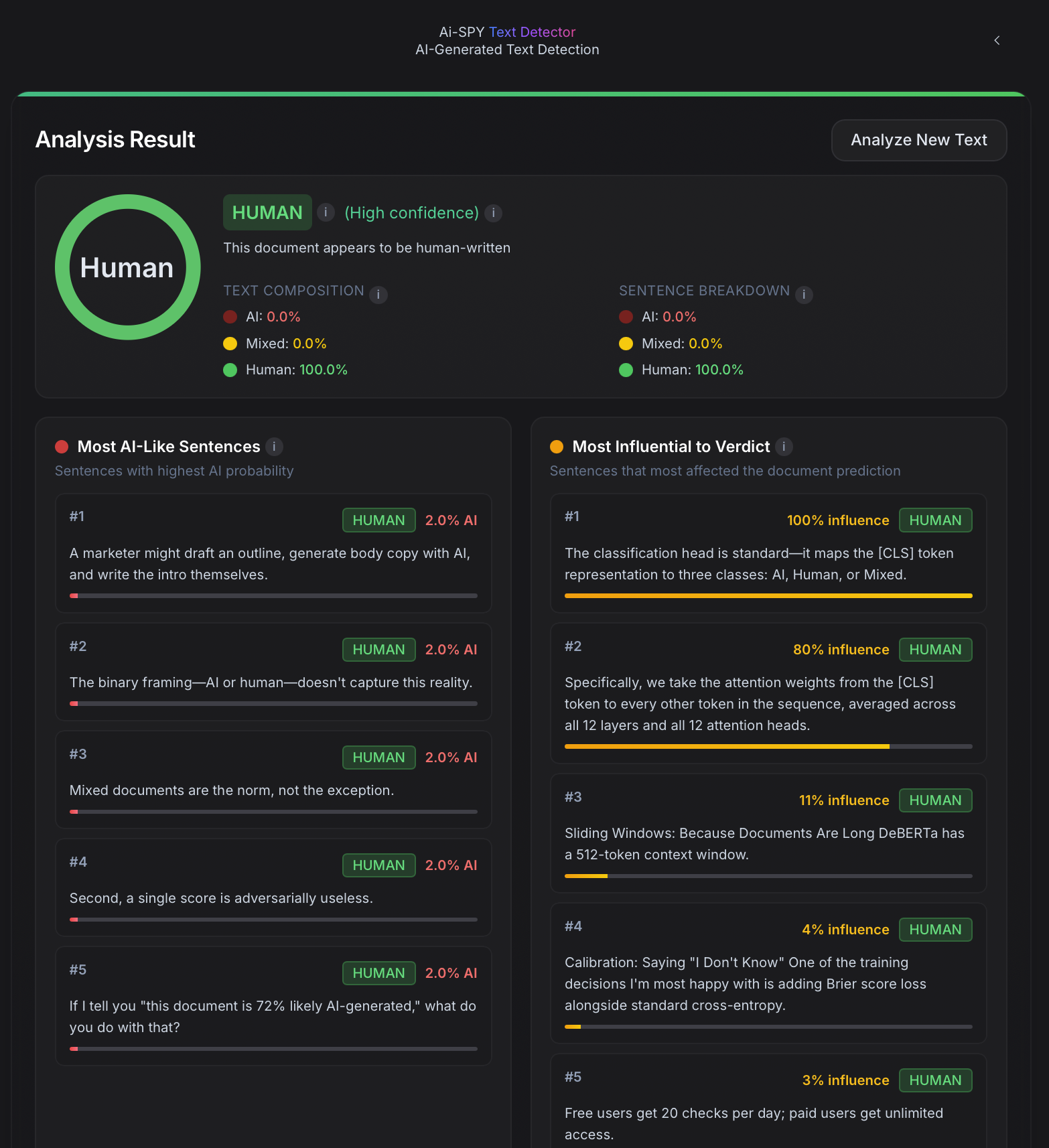

When we started building AI-SPY's text detection, we knew the classifier itself was table stakes. The hard part—the part that actually matters—is making the result legible. Not just "is this AI?" but "which sentences are AI, how confident are we, and what evidence supports that conclusion?"

Why Single-Score Detectors Fail

The standard approach to AI text detection is straightforward: fine-tune a transformer on a binary classification task (AI vs. human), feed it text, get a probability. It works well enough in benchmarks. It falls apart in practice for a few reasons.

First, real-world text is rarely pure. A student might write an essay, then run three paragraphs through ChatGPT to polish them, then edit the output by hand. A marketer might draft an outline, generate body copy with AI, and write the intro themselves. The binary framing—AI or human—doesn't capture this reality. Mixed documents are the norm, not the exception.

Second, a single score is not actionable. If I tell you "this document is 72% likely AI-generated," what do you do with that? You can't point to the problematic sections. You can't have a conversation with the person who submitted it. The score creates suspicion without providing evidence.

Third, confidence without calibration is dangerous. Most detectors are dramatically overconfident. They'll tell you something is "95% AI" when the model has never seen text quite like it before. A well-calibrated model should know when it doesn't know.

The Architecture: Attention as Explanation

Under the hood, the [CLS] token's attention to each token in the sequence becomes a direct readout of what the model found diagnostic.

At the core of AI-SPY's detection engine is a custom model built on DeBERTa V3-Small with two heads: one for classification, one for attribution. The classification head is standard—it maps the [CLS] token representation to three classes: AI, Human, or Mixed. The attribution head is where it gets interesting.

Instead of training a separate model to explain the classifier's decisions, we extract importance scores directly from the transformer's own attention mechanism. Specifically, we take the attention weights from the [CLS] token to every other token in the sequence, averaged across all of the model's layers and attention heads (the stock DeBERTa V3-Small configuration has 6 layers with 12 heads each). This gives us a per-token importance map that reflects which words and phrases the model actually attended to when making its classification decision.
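To make the averaging step concrete, here is a minimal sketch in plain Python. It assumes the attention weights are already available as a nested list indexed `[layer][head][query][key]` (with Hugging Face `transformers`, passing `output_attentions=True` returns tensors in a similar per-layer layout) and that position 0 is the [CLS] token. The function name `cls_importance` and the toy numbers are illustrative, not AI-SPY's actual code.

```python
def cls_importance(attentions):
    """Average the [CLS] attention row over every layer and head.

    attentions[layer][head][query][key] holds softmaxed attention weights.
    Position 0 is assumed to be the [CLS] token.
    """
    n_layers = len(attentions)
    n_heads = len(attentions[0])
    seq_len = len(attentions[0][0][0])
    scores = [0.0] * seq_len
    for layer in attentions:
        for head in layer:
            cls_row = head[0]  # attention from [CLS] to every key position
            for i, weight in enumerate(cls_row):
                scores[i] += weight
    total = n_layers * n_heads
    return [s / total for s in scores]


# Toy input: 2 layers, 2 heads, 3 tokens ([CLS] plus two words).
toy = [
    [[[0.2, 0.5, 0.3], [0.1, 0.8, 0.1], [0.3, 0.3, 0.4]],
     [[0.4, 0.4, 0.2], [0.3, 0.3, 0.4], [0.2, 0.2, 0.6]]],
    [[[0.1, 0.6, 0.3], [0.5, 0.2, 0.3], [0.3, 0.4, 0.3]],
     [[0.1, 0.7, 0.2], [0.2, 0.2, 0.6], [0.1, 0.1, 0.8]]],
]
importance = cls_importance(toy)
print(importance)
```

Because each attention row sums to 1, the averaged scores do too, so they can be read as a distribution of the model's attention over tokens.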

This isn't post-hoc interpretability bolted on after the fact. It's the model's own internal signal, surfaced as a first-class output. The importance scores tell you: "these are the tokens I looked at most when deciding this was AI-generated." It's not a perfect explanation—attention isn't always faithful to the underlying computation—but it's a far more honest signal than a single number.

To keep the importance scores useful, we add sparsity regularization during training. Without it, attention tends to spread diffusely across the sequence, which produces importance maps that highlight everything and therefore explain nothing. The regularizer encourages the model to concentrate its attention on a smaller number of highly diagnostic tokens, making the attributions sharper and more actionable.
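One common way to express this kind of regularizer is an entropy penalty on the [CLS] attention distribution. The sketch below assumes that form; the post doesn't say which sparsity term AI-SPY actually uses, and `sparsity_weight` is a made-up hyperparameter.

```python
import math


def attention_entropy(weights, eps=1e-12):
    """Shannon entropy of an attention distribution (lower = more concentrated)."""
    return -sum(w * math.log(w + eps) for w in weights)


def regularized_loss(task_loss, cls_attention, sparsity_weight=0.01):
    """Task loss plus an entropy penalty (an assumed form of the sparsity term)."""
    return task_loss + sparsity_weight * attention_entropy(cls_attention)


diffuse = [0.25, 0.25, 0.25, 0.25]  # attention spread evenly: explains nothing
peaked = [0.85, 0.05, 0.05, 0.05]   # attention concentrated on one token

print(attention_entropy(diffuse), attention_entropy(peaked))
print(regularized_loss(0.40, diffuse), regularized_loss(0.40, peaked))
```

With the same task loss, the diffuse pattern pays a larger penalty than the peaked one, which is the pressure toward sharper attributions described above.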

Sliding Windows: Because Documents Are Long

DeBERTa has a 512-token context window. Real documents are much longer than that. The naive solution is to truncate—just take the first 512 tokens and classify those. This is obviously terrible; it throws away most of the text and biases the detector toward introductions.

Our approach is a sliding window with overlapping stride. We chunk the document into 512-token windows with a 256-token stride, creating 50% overlap between consecutive chunks. Each chunk gets independently classified and scored for importance. Then we aggregate.
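The chunking itself is just index arithmetic. Here's a rough sketch that works on token positions only; it ignores the slots that special tokens like [CLS] and [SEP] take up in each window, and `window_spans` is an illustrative name rather than anything from the AI-SPY codebase.

```python
def window_spans(n_tokens, window=512, stride=256):
    """Return (start, end) token spans that cover the whole sequence."""
    if n_tokens <= window:
        return [(0, n_tokens)]
    spans = []
    start = 0
    while True:
        end = min(start + window, n_tokens)
        spans.append((start, end))
        if end == n_tokens:
            break  # the last window reaches the end of the document
        start += stride
    return spans


print(window_spans(1200))
```

For a 1,200-token document this yields four windows, with the final one shortened to stop at the end of the text.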

The aggregation is simple but deliberate. Classification probabilities are averaged across all chunks that contain a given region of text. Importance scores are mapped back to character positions using the tokenizer's offset mappings, then aligned to sentence boundaries. The result is a per-sentence probability and a per-sentence importance score, derived from the full document context.
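A simplified version of that aggregation might look like the following. It assumes each chunk yields a single AI probability, that token character offsets come from the tokenizer (for example via `return_offsets_mapping=True` on a Hugging Face fast tokenizer), and that sentence boundaries are already known as character spans. The function names and toy data are hypothetical.

```python
def aggregate_token_probs(n_tokens, chunks):
    """Average chunk-level AI probabilities over every chunk covering a token.

    chunks is a list of ((start, end), ai_probability) pairs in token indices.
    """
    sums = [0.0] * n_tokens
    counts = [0] * n_tokens
    for (start, end), prob in chunks:
        for i in range(start, end):
            sums[i] += prob
            counts[i] += 1
    return [s / c if c else 0.0 for s, c in zip(sums, counts)]


def sentence_scores(token_probs, token_offsets, sentence_spans):
    """Mean token probability per sentence; all spans are character (start, end)."""
    scores = []
    for s_start, s_end in sentence_spans:
        vals = [p for p, (t_start, t_end) in zip(token_probs, token_offsets)
                if t_start < s_end and t_end > s_start]  # token overlaps sentence
        scores.append(sum(vals) / len(vals) if vals else 0.0)
    return scores


# Toy document: 6 tokens of 5 characters each, two overlapping chunks.
chunks = [((0, 4), 0.9), ((2, 6), 0.3)]
offsets = [(0, 5), (5, 10), (10, 15), (15, 20), (20, 25), (25, 30)]
sentences = [(0, 15), (15, 30)]

token_probs = aggregate_token_probs(6, chunks)
per_sentence = sentence_scores(token_probs, offsets, sentences)
print(token_probs, per_sentence)
```

Tokens covered by both chunks get the mean of the two chunk scores, so the second sentence ends up between the two chunk-level verdicts instead of inheriting either one wholesale.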

The overlap is key. Without it, you get boundary artifacts: sentences that straddle chunk boundaries get misclassified because neither chunk sees them fully. With 50% overlap, a sentence cut off at the edge of one chunk sits near the middle of the neighboring chunk, so it is scored at least once with context on both sides.

Three Lenses on the Same Document

When you submit text to AI-SPY, you don't get a single number. You get three ranked lists, a composition donut chart, and a color-coded text view—all derived from the same underlying analysis.

- Most AI-likely sentences. The top 5 sentences with the highest AI probability. These are the sentences the model thinks were most likely generated by an AI system.

- Most influential sentences. The top 5 sentences by attention weight, the ones that most influenced the overall verdict. This is crucial because the most influential sentence isn't always the most AI-like sentence. Sometimes a single highly human sentence in an otherwise AI document is what pulls the confidence score down.

- Most human-like sentences. The top 5 sentences with the lowest AI probability. These serve as a counterpoint, showing the user where the model sees authentic human writing.
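Given per-sentence scores, the three lists are just three sorts over the same data. A small sketch, assuming each sentence is a dict with `text`, `ai_prob`, and `importance` keys (those field names are invented for illustration):

```python
def ranked_views(sentences, k=5):
    """Build the three top-k lists from one set of per-sentence scores."""
    by_ai = sorted(sentences, key=lambda s: s["ai_prob"], reverse=True)
    by_importance = sorted(sentences, key=lambda s: s["importance"], reverse=True)
    by_human = sorted(sentences, key=lambda s: s["ai_prob"])
    return {
        "most_ai_likely": by_ai[:k],
        "most_influential": by_importance[:k],
        "most_human_like": by_human[:k],
    }


scored = [
    {"text": "Sentence A", "ai_prob": 0.92, "importance": 0.10},
    {"text": "Sentence B", "ai_prob": 0.15, "importance": 0.55},
    {"text": "Sentence C", "ai_prob": 0.60, "importance": 0.35},
]
views = ranked_views(scored, k=1)
print(views)
```

In this toy example the most influential sentence (B) is also the most human-like one, which is exactly the case where the lists tell you something a single score can't.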

We also show the full text with color-coded highlights—red for AI-likely, amber for influential—so you can see the structure of the document at a glance. A fully AI document lights up red. A mixed document shows patches. A human document stays clean.

On top of this, we compute two complementary metrics: character-level composition and sentence-level breakdown. Character-level tells you what percentage of the text by volume reads as AI. Sentence-level tells you what percentage of sentences by count are classified as AI. These can diverge meaningfully—a document might have only 2 AI sentences, but if they're long paragraphs, they could represent 60% of the text by character count.
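The divergence is easy to reproduce with toy numbers. This sketch assumes a sentence counts as AI when its probability is at least 0.5; that threshold is a placeholder, not a documented AI-SPY setting.

```python
def composition(sentences, threshold=0.5):
    """Return (character-level AI share, sentence-level AI share).

    sentences is a list of (text, ai_probability) pairs.
    """
    flagged = [(text, p) for text, p in sentences if p >= threshold]
    total_chars = sum(len(text) for text, _ in sentences)
    char_share = (sum(len(text) for text, _ in flagged) / total_chars
                  if total_chars else 0.0)
    sent_share = len(flagged) / len(sentences) if sentences else 0.0
    return char_share, sent_share


# Two long AI-flagged sentences and eight short human ones.
doc = [("x" * 300, 0.9)] * 2 + [("y" * 50, 0.1)] * 8
char_share, sent_share = composition(doc)
print(char_share, sent_share)
```

Here only 20% of the sentences are flagged, but they account for 60% of the characters.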

Calibration: Saying "I Don't Know"

One of the training decisions I'm most happy with is adding Brier score loss alongside standard cross-entropy. Cross-entropy optimizes for correctness—it wants the model to assign high probability to the right class. Brier score optimizes for calibration—it wants the model's confidence to reflect its actual accuracy. When the model says "80% AI," it should be right about 80% of the time.
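For a single example, the combined objective can be sketched like this. The class order (AI, Human, Mixed) and the `brier_weight` mixing coefficient are assumptions made for the example; the post doesn't give the actual weighting.

```python
import math


def cross_entropy(probs, target):
    """Negative log-probability assigned to the true class."""
    return -math.log(probs[target])


def brier_score(probs, target):
    """Squared error between predicted probabilities and the one-hot label."""
    return sum((p - (1.0 if i == target else 0.0)) ** 2
               for i, p in enumerate(probs))


def combined_loss(probs, target, brier_weight=1.0):
    return cross_entropy(probs, target) + brier_weight * brier_score(probs, target)


# Assumed class order: 0 = AI, 1 = Human, 2 = Mixed.
confident_right = brier_score([0.8, 0.1, 0.1], target=0)
confident_wrong = brier_score([0.98, 0.01, 0.01], target=1)
print(confident_right, confident_wrong)
print(combined_loss([0.8, 0.1, 0.1], target=0))
```

The Brier term is bounded (at most 2 for a one-hot label), so it penalizes overconfident mistakes without the unbounded blow-up cross-entropy has as the true-class probability approaches zero.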

The practical effect is that AI-SPY produces genuinely uncertain predictions when the text is ambiguous. Mixed documents, heavily edited AI text, or human text that happens to be stylistically flat—these get moderate confidence scores instead of false certainty. We surface this explicitly in the UI with confidence badges: high (85%+), moderate (65-85%), and low (under 65%).
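The badge mapping is a pair of thresholds. In this sketch `confidence` is assumed to be the top class probability on a 0 to 1 scale, and a score of exactly 85% is treated as high, matching the "85%+" label.

```python
def confidence_badge(confidence):
    """Map a confidence in [0, 1] to the badge shown in the UI."""
    if confidence >= 0.85:
        return "high"
    if confidence >= 0.65:
        return "moderate"
    return "low"


for value in (0.97, 0.85, 0.70, 0.64):
    print(value, confidence_badge(value))
```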

A detector that is confidently wrong is worse than no detector at all. Calibration is what makes the difference between a tool you can rely on and a tool that creates false accusations.

The Full Stack

The detection model runs behind a FastAPI backend with a few guardrails worth mentioning. Text submissions are bounded between 500 and 50,000 characters. Rate limiting caps inference at 10 requests per minute per user. Free users get 20 checks per day; paid users get unlimited access.
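Stripped of the web framework, those guardrails reduce to a length check and a per-user request counter. The sketch below uses a sliding 60-second window kept in memory; the real service may enforce limits differently (for example in middleware or a shared store), and `validate_text` and `RateLimiter` are names invented for this example.

```python
from collections import defaultdict, deque

MIN_CHARS, MAX_CHARS = 500, 50_000


def validate_text(text):
    """Reject submissions outside the accepted length range."""
    n = len(text)
    if n < MIN_CHARS or n > MAX_CHARS:
        raise ValueError(
            f"text must be {MIN_CHARS} to {MAX_CHARS} characters, got {n}")
    return text


class RateLimiter:
    """Allow at most `limit` requests per user within a sliding time window."""

    def __init__(self, limit=10, window_seconds=60.0):
        self.limit = limit
        self.window = window_seconds
        self.hits = defaultdict(deque)  # user id -> timestamps of recent requests

    def allow(self, user_id, now):
        recent = self.hits[user_id]
        while recent and now - recent[0] >= self.window:
            recent.popleft()  # drop requests that have aged out of the window
        if len(recent) >= self.limit:
            return False
        recent.append(now)
        return True


limiter = RateLimiter()
decisions = [limiter.allow("user-1", float(t)) for t in range(11)]
print(decisions)
```

The eleventh request inside the same minute is refused, and capacity frees up again as earlier requests age out of the window.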

The model itself is loaded lazily on the first request. DeBERTa V3-Small is small enough to run inference on modest hardware without a GPU, which keeps infrastructure costs low. The entire sliding window pipeline (tokenization, chunking, inference, aggregation, sentence alignment) runs in a few seconds for typical documents.
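Lazy loading can be as simple as a cached accessor. This is one possible pattern, not necessarily the one AI-SPY uses; the returned dict is a stand-in for the real model object, and `load_count` exists only to show that the expensive step runs once.

```python
from functools import lru_cache

load_count = 0  # counts how many times the expensive load actually runs


@lru_cache(maxsize=1)
def get_model():
    """Load the model on first use and reuse it on every later call."""
    global load_count
    load_count += 1
    return {"name": "placeholder-model"}  # stand-in for real model weights


first = get_model()
second = get_model()
print(load_count, first is second)
```

The process starts quickly because nothing is loaded at import time, at the cost of a slower first request.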

On the frontend, the Next.js app handles validation before the request ever leaves the browser. Real-time word counts, character limits, and usage tracking give immediate feedback. Results render as an interactive breakdown with the donut chart, sentence lists, and highlighted text view described above.

What This Doesn't Solve

I want to be honest about the limitations. Attention-based attribution is indicative, not causal. The importance scores show where the model looked, not necessarily why. Two very different reasoning paths could produce the same attention pattern.

The three-class framing (AI/Human/Mixed) is better than binary, but it's still a simplification. In reality, there's a spectrum of AI involvement—from "AI wrote every word" to "AI suggested a synonym the human accepted." Our model doesn't capture that granularity.

And like all AI detectors, ours is in an arms race with the models it's trying to detect. As language models improve and their output becomes more varied, more human-like, and more stylistically diverse, detection gets harder. The detector's edge comes from patterns that are statistically real but perceptually invisible: subtle distributional signatures in token frequency, sentence structure, and lexical choice. That edge narrows over time.

Why It Matters

The point of AI-SPY's text detection isn't to be a lie detector. It's to be a conversation starter. When a teacher suspects a student used AI, a single percentage creates confrontation. A highlighted document with specific sentences and confidence levels creates dialogue. "Hey, these three sentences flagged as AI-likely with high confidence—can you walk me through your process here?"

That's the difference between a black box and a tool. A black box demands trust. A tool earns it by showing its work.

The detectors that win won't just be more accurate. They'll be more transparent.

And the ones that last won't just classify. They'll explain.

We ran this very article through AI-SPY. The verdict: 100% Human, high confidence. Make of that what you will.